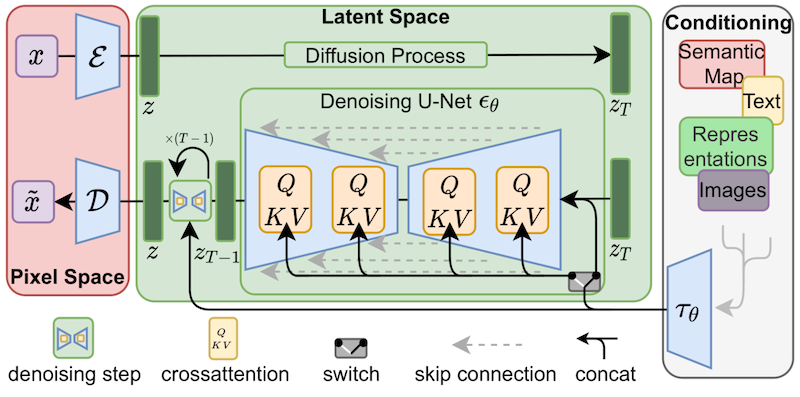

Cross Attention Control implementation based on the code of the official stable diffusion repository : r/StableDiffusion

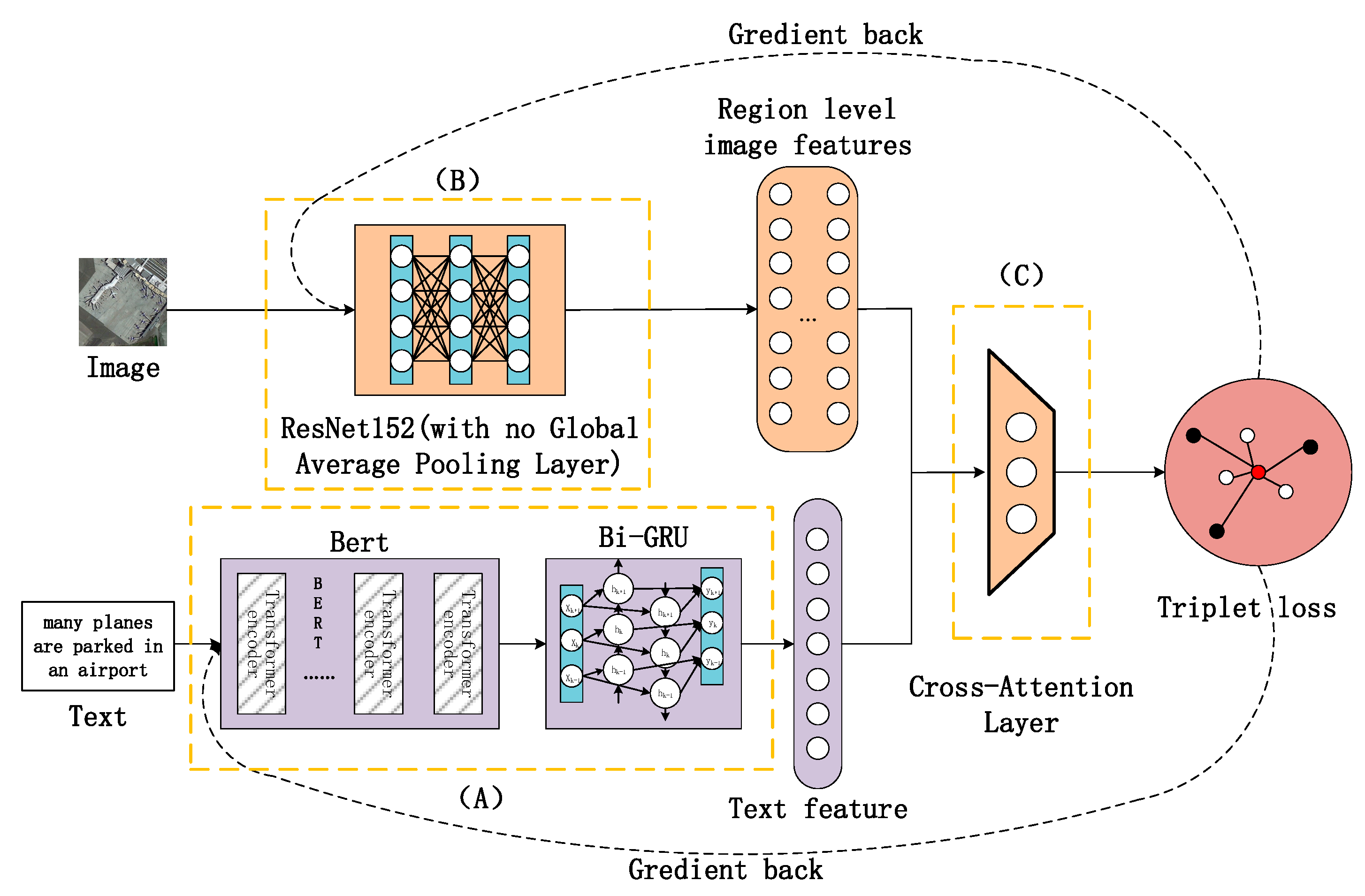

Applied Sciences | Free Full-Text | A Cross-Attention Mechanism Based on Regional-Level Semantic Features of Images for Cross-Modal Text-Image Retrieval in Remote Sensing

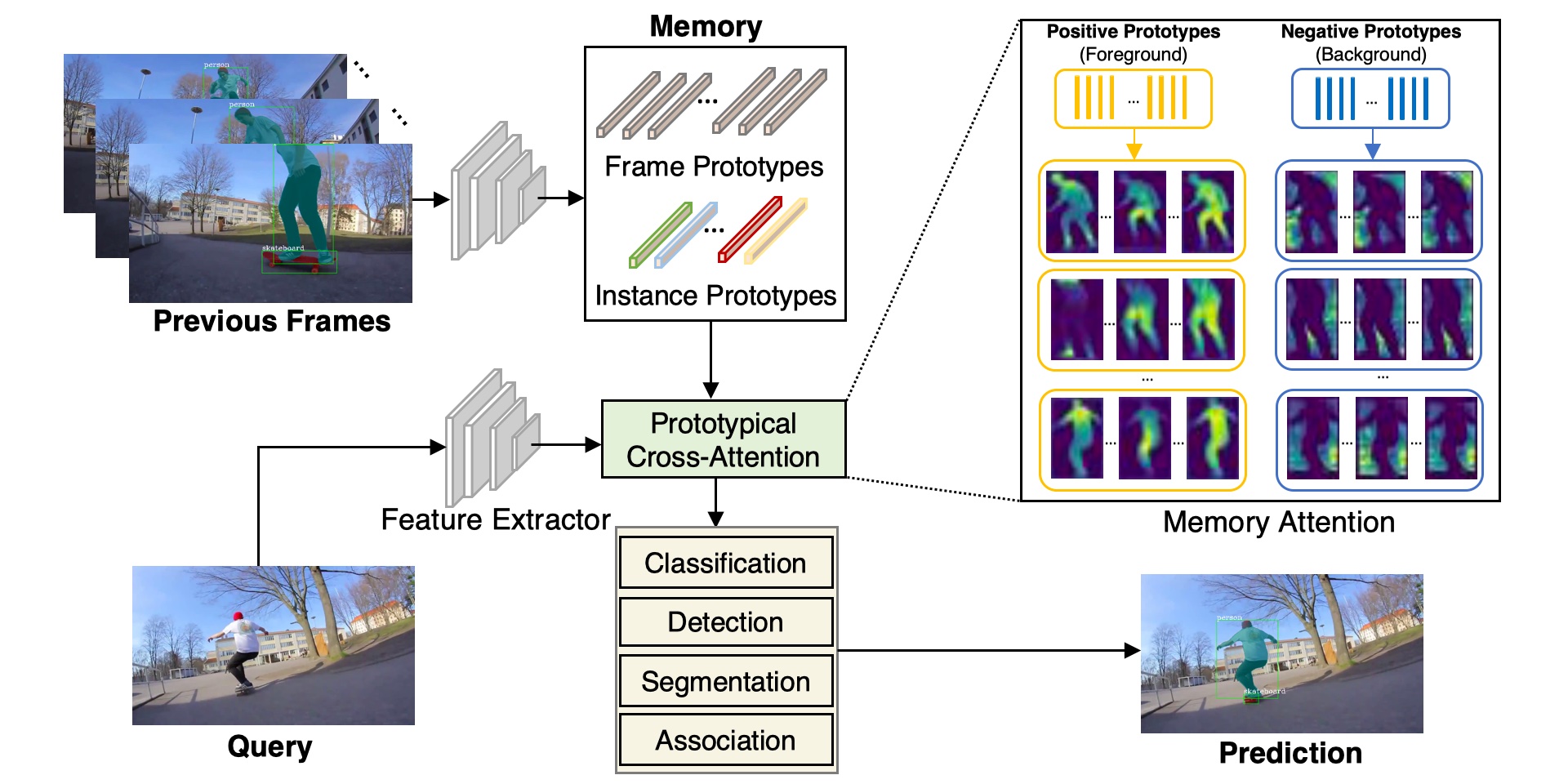

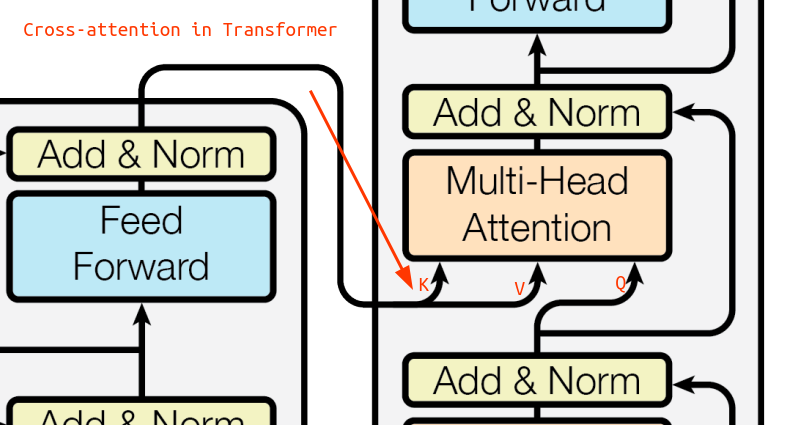

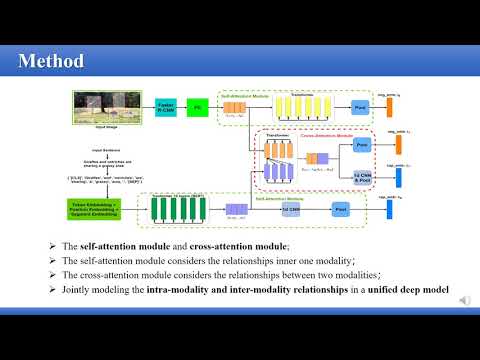

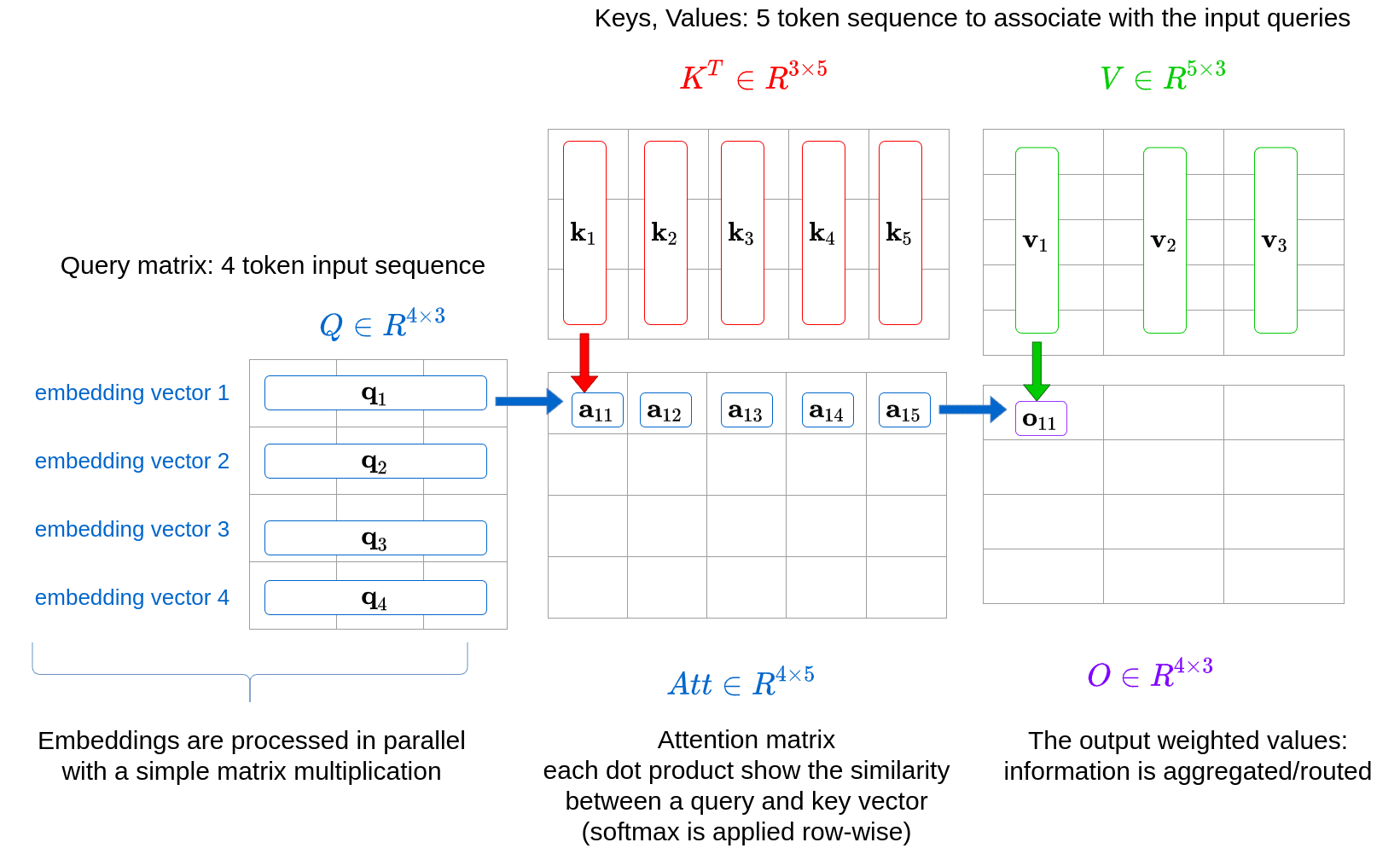

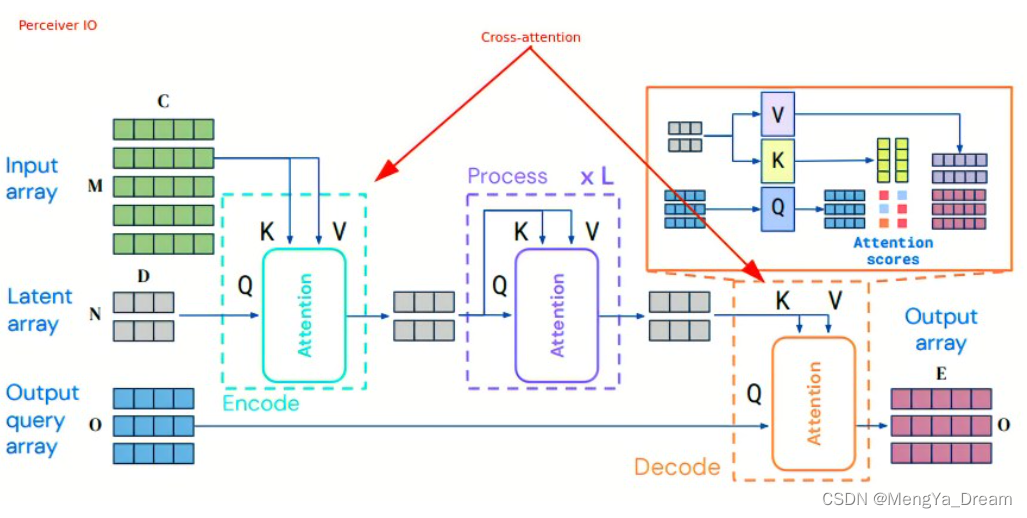

Transformer is All You Need: They Can Do Anything! | AI-SCHOLAR | AI: (Artificial Intelligence) Articles and technical information media

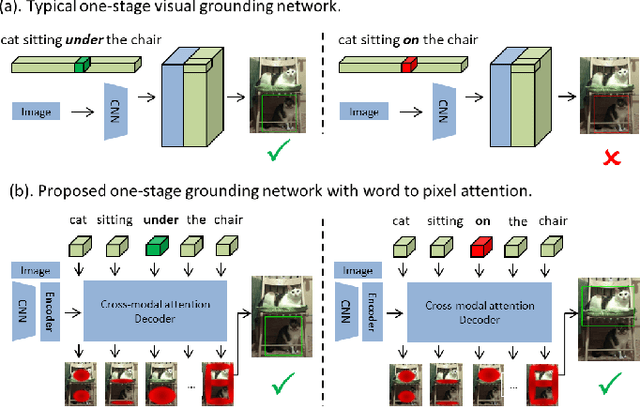

Cross-Graph Attention Enhanced Multi-Modal Correlation Learning for Fine-Grained Image-Text Retrieval | Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval

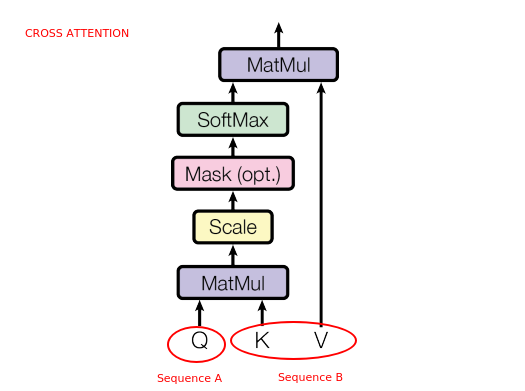

Multiscale Dense Cross-Attention Mechanism with Covariance Pooling for Hyperspectral Image Scene Classification

![\[PDF\] CAT: Cross Attention in Vision Transformer | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/ab70c5e1a338cb470ec39c22a4f10e0f19e61edd/4-Figure2-1.png)