python - What are logits? What is the difference between softmax and softmax_cross_entropy_with_logits? - Stack Overflow

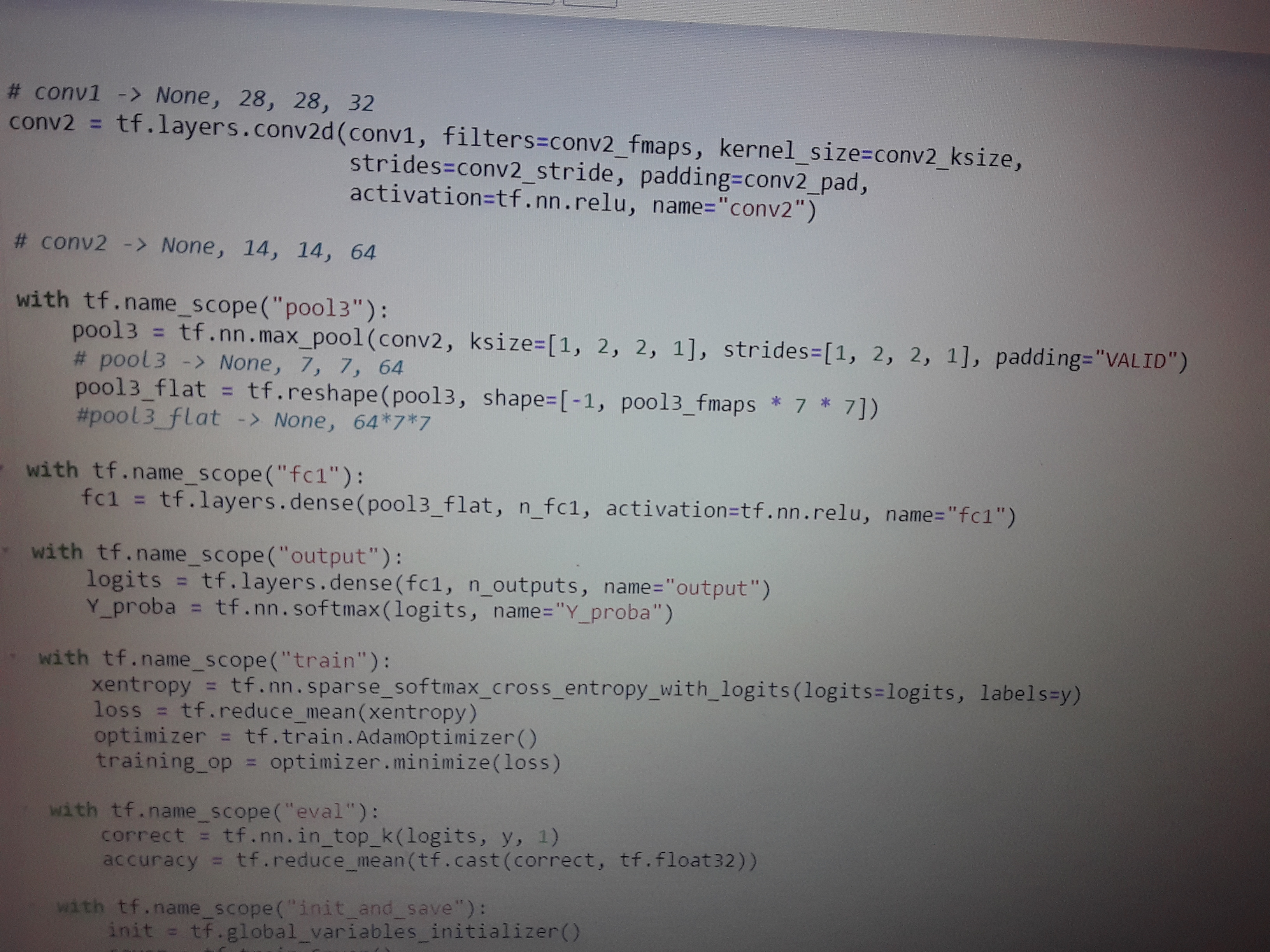

bug report: shouldn't use tf.nn.sparse_softmax_cross_entropy_with_logits to calculate loss · Issue #3 · AntreasAntoniou/MatchingNetworks · GitHub

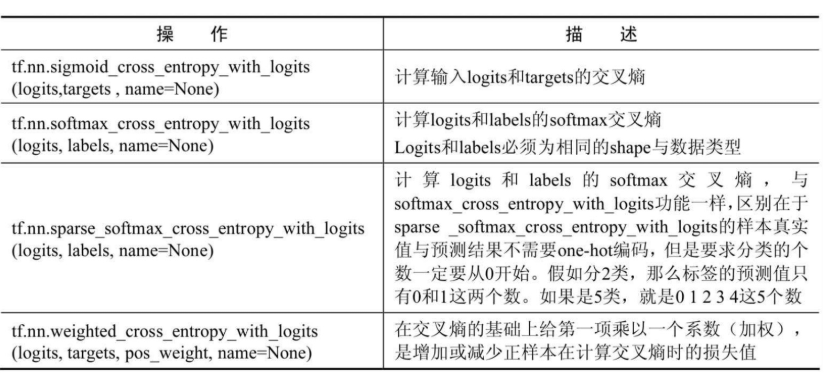

Difference between tf.nn.sparse_softmax_cross_entropy_with_logits and tf.nn.softmax_cross_entropy_with_logits (repost) - dxz_tust's blog - CSDN Blog

A study of tf.nn.sparse_softmax_cross_entropy_with_logits(logits=y, labels=tf.argmax(y_, 1)) - 阿言在学习's blog - CSDN Blog

![Summaries from custom estimator - Hands-On Machine Learning on Google Cloud Platform [Book]](https://www.oreilly.com/api/v2/epubs/9781788393485/files/assets/f0b30c65-9d1a-4d65-9e46-f6b8bdde557a.png)